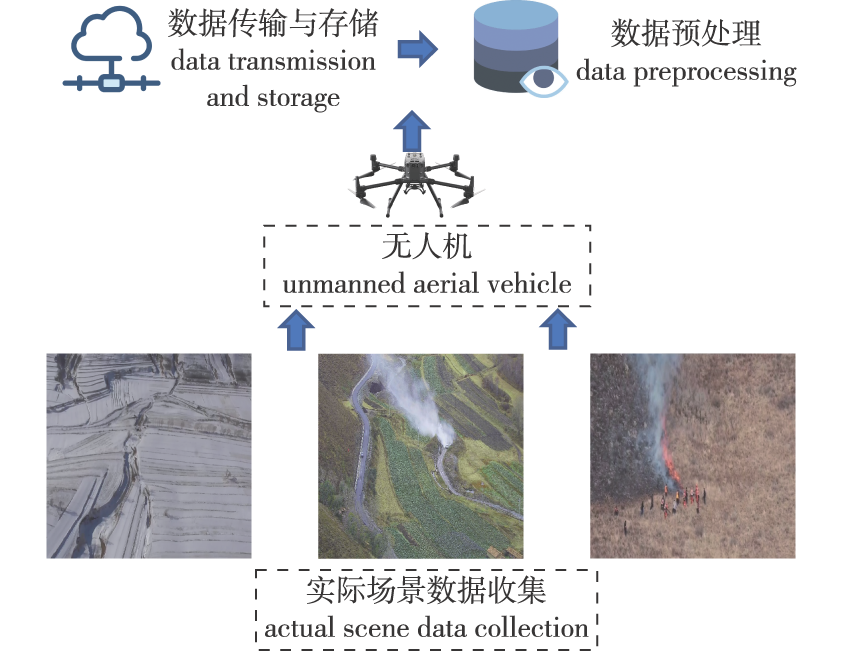

【Objective】Aerial forest fire images are characterized by small fire-point targets and complex backgrounds. To improve the accuracy and robustness of aerial forest fire image recognition, this study proposes FireViT, an image recognition method based on the self-attention mechanism. 【Method】Forest fire videos collected by unmanned aerial vehicles (UAVs) in Chongli District, Zhangjiakou City, were preprocessed to construct a dataset. A 10-layer Vision Transformer (ViT) was adopted as the backbone network. Images were serialized into overlapping sliding-window patches, and after positional information was embedded, the resulting sequence served as the input to the first ViT layer. The outputs of the region selection module, extracted from the first nine ViT layers, were embedded in batch into the tenth layer through multi-head self-attention and multilayer perceptron mechanisms. This effectively amplified subtle differences between sub-images and captured small-target features. Finally, a contrastive feature learning strategy was used to construct the objective loss function for model prediction. To verify the model's effectiveness, training-to-test sample ratios of 8∶2, 7∶3, 6∶4, and 4∶6 were set, and recognition performance was compared with that of five classical models. 【Result】Under all four training/test ratios, the model reached a recognition rate of 100%, with accuracies of 94.82%, 95.05%, 94.90%, and 94.80%, respectively, and an average accuracy of 94.89%, higher than those of the five comparison models. The model converged rapidly to high accuracy, remained stable in subsequent iterations, and showed strong generalization ability. Under the four ratios, the recognition rates were 99.97%, 99.89%, 99.80%, and 99.77%, respectively, again higher than those of the other five models. 【Conclusion】By combining a self-attention mechanism with weakly supervised learning, the proposed model mines local feature differences in aerial forest fire images across different environments and shows good generalization ability and robustness. It can provide an important basis for improving the capacity and efficiency of fire situation and fire risk response and for preventing forest fires.
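
The overlapping sliding-window serialization and position embedding described in the Method can be illustrated with a minimal NumPy sketch. This is not the authors' code: the patch size, stride, embedding width, and the randomly initialized projection, CLS token, and position embeddings are all assumptions chosen for the example.

```python
import numpy as np

def overlapping_patches(img: np.ndarray, patch: int, stride: int) -> np.ndarray:
    """Split an (H, W, C) image into patch x patch windows taken every `stride`
    pixels (stride < patch, so neighbouring windows overlap).
    Returns an (N, patch * patch * C) array of flattened patches."""
    h, w, c = img.shape
    if stride >= patch:
        raise ValueError("stride must be smaller than patch for windows to overlap")
    rows = (h - patch) // stride + 1
    cols = (w - patch) // stride + 1
    out = np.empty((rows * cols, patch * patch * c), dtype=img.dtype)
    k = 0
    for i in range(rows):
        for j in range(cols):
            y, x = i * stride, j * stride
            out[k] = img[y:y + patch, x:x + patch, :].reshape(-1)
            k += 1
    return out

def embed_tokens(patches: np.ndarray, dim: int, rng: np.random.Generator) -> np.ndarray:
    """Project patches to `dim`, prepend a CLS token, and add position embeddings.
    All weights are hypothetical and randomly initialized here; in a trained
    ViT they would be learned parameters."""
    n, d_in = patches.shape
    w_proj = rng.normal(0.0, 0.02, size=(d_in, dim))  # linear patch projection
    cls = rng.normal(0.0, 0.02, size=(1, dim))        # classification token
    pos = rng.normal(0.0, 0.02, size=(n + 1, dim))    # one position vector per token
    tokens = patches.astype(np.float64) @ w_proj
    return np.concatenate([cls, tokens], axis=0) + pos
```

With a 32 × 32 × 3 input, a 16-pixel patch, and an 8-pixel stride, this yields 3 × 3 = 9 patches, and each pair of horizontally adjacent windows shares an 8-pixel-wide strip. The overlap makes it less likely that a small fire point falling on a window border is split between two disjoint patches.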

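The abstract does not specify how the region selection module or the contrastive objective is computed. The sketch below assumes one possible formulation, modeled on the part selection module and contrastive loss used in TransFG. Attention matrices from the first L-1 layers are multiplied together, and for each head the patch token that the CLS token attends to most is selected. Those tokens are concatenated with the CLS token as input to the last layer. A cosine-similarity loss with a margin then separates classes. The function names and the margin of 0.4 are hypothetical.

```python
import numpy as np

def select_regions(attn_maps):
    """Pick, for each attention head, the patch token the CLS token attends to most.

    attn_maps: list of (heads, T, T) attention matrices from the first L-1 layers
    (hypothetically 9 layers), with T = N + 1 and token 0 = CLS.
    """
    joint = attn_maps[0]
    for a in attn_maps[1:]:
        # Batched matmul over heads: accumulate attention flow through the layers.
        joint = a @ joint
    cls_to_patch = joint[:, 0, 1:]               # CLS row, patch columns only
    return np.argmax(cls_to_patch, axis=1) + 1   # +1 restores token positions (0 is CLS)

def last_layer_input(hidden, idx):
    """Concatenate the CLS token with the selected patch tokens of layer L-1.

    hidden: (T, D) token states output by layer L-1; idx: selected token positions.
    The result would then pass through the last layer's self-attention and MLP.
    """
    return np.concatenate([hidden[:1], hidden[idx]], axis=0)

def contrastive_loss(z, y, margin=0.4):
    """Cosine-similarity contrastive loss over a batch (margin is an assumption).

    Same-label pairs are pulled together via (1 - cos); different-label pairs are
    penalized only when their cosine similarity exceeds `margin`.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    sim = z @ z.T
    same = y[:, None] == y[None, :]
    pos = np.where(same, 1.0 - sim, 0.0)
    neg = np.where(~same, np.maximum(sim - margin, 0.0), 0.0)
    b = z.shape[0]
    return float((pos.sum() + neg.sum()) / b**2)
```

Because only one token per head reaches the last layer, that layer attends over a short, discriminative subset of patches rather than the full sequence. This is one way the "amplify subtle differences between sub-images" idea could be realized.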