UAV forestry land-cover image segmentation method based on attention mechanism and improved DeepLabV3+

ZHAO Yugang, LIU Wenping, ZHOU Yan, CHEN Riqiang, ZONG Shixiang, LUO Youqing

Journal of Nanjing Forestry University (Natural Sciences Edition), 2024, 48(4): 93-103.

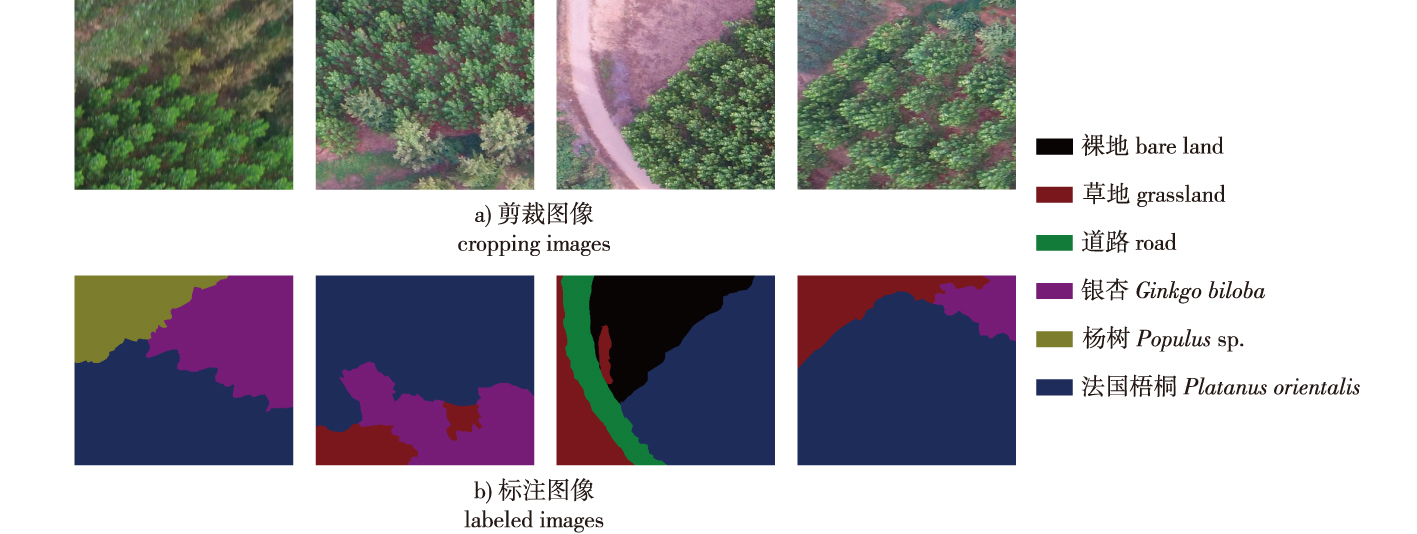

【Objective】This study proposes Tree-DeepLab, a land-cover segmentation method for unmanned aerial vehicle (UAV) images of forest areas. It is built on an attention mechanism and the DeepLabV3+ semantic segmentation network and is designed to extract the distribution of the main land-cover types in forest areas.

【Method】First, the forest images were annotated with six land-cover categories (Platanus orientalis, Ginkgo biloba, Populus sp., grassland, road, and bare ground) to build the semantic segmentation dataset. Second, the DeepLabV3+ network was improved as follows: (1) its Xception backbone was replaced with ResNeSt101, which uses a split-attention mechanism; (2) the atrous convolutions with different dilation rates in the atrous spatial pyramid pooling (ASPP) module were connected in a combined serial and parallel arrangement, and the combination of dilation rates was also changed; (3) a shallow-feature fusion branch was added to the decoder; (4) spatial attention modules were added to the decoder; and (5) efficient channel attention (ECA) modules were added to the decoder.

【Result】The model was trained and tested on an in-house dataset. Tree-DeepLab achieved a mean pixel accuracy (mPA) of 97.04% and a mean intersection over union (mIoU) of 85.01%, which are 4.03 and 14.07 percentage points higher, respectively, than those of the original DeepLabV3+. It also outperformed U-Net and PSPNet.

【Conclusion】The Tree-DeepLab semantic segmentation model can effectively segment UAV aerial images of forest areas and obtain the distribution of the main land-cover types in those areas.

unmanned aerial vehicle (UAV) / land-cover image segmentation / forestry images / DeepLabV3+ / attention mechanism / ResNeSt
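The split-attention mechanism behind the ResNeSt101 backbone can be illustrated with a simplified forward pass. The sketch below assumes NumPy, a single cardinal group, no batch normalization, and random illustrative weights; the function name `split_attention` and its weight shapes are hypothetical and do not reproduce the ResNeSt or Tree-DeepLab code. The R feature-map splits are summed, pooled, passed through two dense layers, and then recombined with per-channel weights from a softmax taken across the splits.

```python
import numpy as np


def split_attention(splits, w1, w2):
    """Simplified radix-split attention (one cardinal group, no BatchNorm).

    splits: array (R, C, H, W) holding the R feature-map splits U_1..U_R.
    w1: (D, C) reduction weights; w2: (R*C, D) expansion weights (hypothetical).
    Returns the fused feature map of shape (C, H, W).
    """
    R, C, H, W = splits.shape
    fused = splits.sum(axis=0)                   # element-wise sum of the splits
    s = fused.mean(axis=(1, 2))                  # global average pooling -> (C,)
    z = np.maximum(w1 @ s, 0.0)                  # dense layer + ReLU -> (D,)
    logits = (w2 @ z).reshape(R, C)              # one score per split and channel
    logits -= logits.max(axis=0, keepdims=True)  # numerical stability for softmax
    a = np.exp(logits)
    a /= a.sum(axis=0, keepdims=True)            # r-softmax across the R splits
    return (a[:, :, None, None] * splits).sum(axis=0)


rng = np.random.default_rng(0)
R, C, D, H, W = 2, 8, 4, 5, 5
splits = rng.standard_normal((R, C, H, W))
out = split_attention(splits, rng.standard_normal((D, C)), rng.standard_normal((R * C, D)))
print(out.shape)  # (8, 5, 5)
```

Because the softmax weights for each channel sum to 1, the output is a per-channel convex combination of the splits, which is what lets the block emphasize whichever split carries the more useful features.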
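The effect of connecting ASPP atrous convolutions in series can be shown with receptive-field arithmetic. A k x k convolution with dilation rate d spans k + (k - 1)(d - 1) pixels, and stride-1 convolutions applied one after another add their spans. The sketch below assumes 3 x 3 kernels and uses the DeepLabV3+ default rates (6, 12, 18) purely for illustration. The abstract does not state the rates or the exact serial/parallel wiring used in Tree-DeepLab, so the `serial` cascade is a hypothetical arrangement.

```python
def effective_kernel(k: int, d: int) -> int:
    """Span in pixels of a k x k atrous convolution with dilation rate d."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(rates, k: int = 3) -> int:
    """Receptive field of stride-1 atrous convolutions applied one after another."""
    return 1 + sum(effective_kernel(k, d) - 1 for d in rates)


RATES = (6, 12, 18)  # DeepLabV3+ defaults, used here only for illustration

# Standard (parallel) ASPP: every branch sees only its own dilated kernel.
parallel = [stacked_receptive_field([d]) for d in RATES]
# Hypothetical serial cascade: branch i is applied to the output of branch i - 1.
serial = [stacked_receptive_field(RATES[: i + 1]) for i in range(len(RATES))]

print(parallel)  # [13, 25, 37]
print(serial)    # [13, 37, 73]
```

Under these assumptions, the deepest branch of the cascade covers 73 feature-map pixels instead of 37, while each intermediate output still provides a smaller-scale context. This is one way a combined serial and parallel layout can widen the range of scales seen by the module.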
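The abstract does not specify the form of the decoder's spatial attention module. A common design is the spatial attention of CBAM: pool across channels with mean and max, convolve the two resulting maps, and gate every spatial position with a sigmoid. The following NumPy sketch assumes that design. It uses a naive loop convolution for readability, and its kernel weights are hypothetical random values rather than trained parameters.

```python
import numpy as np


def spatial_attention(x, kernel):
    """CBAM-style spatial attention for a feature map x of shape (C, H, W).

    kernel: conv weights of shape (2, k, k) with odd k, mapping the stacked
    [mean, max] channel descriptors to a single attention map.
    """
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W) channel-wise pooling
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(desc, ((0, 0), (p, p), (p, p)))    # zero "same" padding
    H, W = x.shape[1:]
    logits = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            logits[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    attn = 1.0 / (1.0 + np.exp(-logits))               # (H, W) map in (0, 1)
    return x * attn[None, :, :]                        # same weight for every channel


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 10, 10))
out = spatial_attention(x, 0.1 * rng.standard_normal((2, 7, 7)))
print(out.shape)  # (16, 10, 10)
```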
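Efficient channel attention (ECA), as introduced in ECA-Net, replaces the fully connected layers of squeeze-and-excitation with a 1-D convolution across neighbouring channels. Its kernel size k grows with the channel count C as |log2(C)/γ + b/γ|_odd with γ = 2 and b = 1 by default. Here that value is computed as is commonly done: truncate it to an integer, then add 1 if the result is even. The NumPy sketch below assumes this formulation for a single feature map. The averaging kernel in the example is an illustrative choice, not trained weights.

```python
import math

import numpy as np


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1-D kernel size from ECA-Net, forced to be odd."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x, weights):
    """ECA forward pass for one feature map x of shape (C, H, W).

    weights: 1-D kernel of odd length k, shared by all channels.
    """
    y = x.mean(axis=(1, 2))                 # global average pooling -> (C,)
    k = len(weights)
    y_pad = np.pad(y, k // 2)               # zero padding keeps the length at C
    # Cross-correlation over each channel's k nearest neighbours (no reduction).
    z = np.array([y_pad[i:i + k] @ weights for i in range(len(y))])
    attn = 1.0 / (1.0 + np.exp(-z))         # sigmoid gate per channel
    return x * attn[:, None, None]


x = np.random.default_rng(0).standard_normal((64, 8, 8))
k = eca_kernel_size(64)
out = eca(x, np.full(k, 1.0 / k))
print(k, out.shape)  # 3 (64, 8, 8)
```

Because each channel weight depends only on k neighbouring channels, the module adds just k parameters per layer. This keeps it cheap enough to insert at several points in the decoder.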
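The abstract reports mPA and mIoU without giving their formulas. Under the standard definitions (assumed here, since the paper's exact formulas are not reproduced in this section), per-class pixel accuracy is TP / (TP + FN) and per-class IoU is TP / (TP + FP + FN), each averaged over the classes. The NumPy sketch below computes both from a pixel-level confusion matrix. The function names and toy label maps are hypothetical. Because the reported gains are absolute differences in percentage points, they imply baseline DeepLabV3+ scores of 93.01% mPA (97.04 - 4.03) and 70.94% mIoU (85.01 - 14.07).

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Pixel-level confusion matrix: rows are ground-truth classes, columns predictions."""
    idx = num_classes * y_true.ravel() + y_pred.ravel()
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mpa_miou(cm):
    """Mean pixel accuracy and mean IoU from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    with np.errstate(divide="ignore", invalid="ignore"):
        pa = tp / cm.sum(axis=1)                            # per class: TP / (TP + FN)
        iou = tp / (cm.sum(axis=1) + cm.sum(axis=0) - tp)   # per class: TP / (TP + FN + FP)
    return np.nanmean(pa), np.nanmean(iou)                  # 0/0 entries (NaN) are skipped


y_true = np.array([[0, 0, 1], [1, 2, 2]])
y_pred = np.array([[0, 1, 1], [1, 2, 0]])
mpa, miou = mpa_miou(confusion_matrix(y_true, y_pred, 3))
print(f"mPA={mpa:.4f}, mIoU={miou:.4f}")  # mPA=0.6667, mIoU=0.5000
```

mIoU penalizes both missed pixels and false alarms, whereas mPA only counts misses. This is consistent with the larger gap between the two scores, and the larger improvement in mIoU, reported above.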
