Method for aerial forest fire image recognition based on self-attention mechanism

WANG Junling, FAN Xijian, YANG Xubing, YE Qiaolin, FU Liyong

Journal of Nanjing Forestry University (Natural Sciences Edition), 2025, Vol. 49, Issue (2): 194-202.

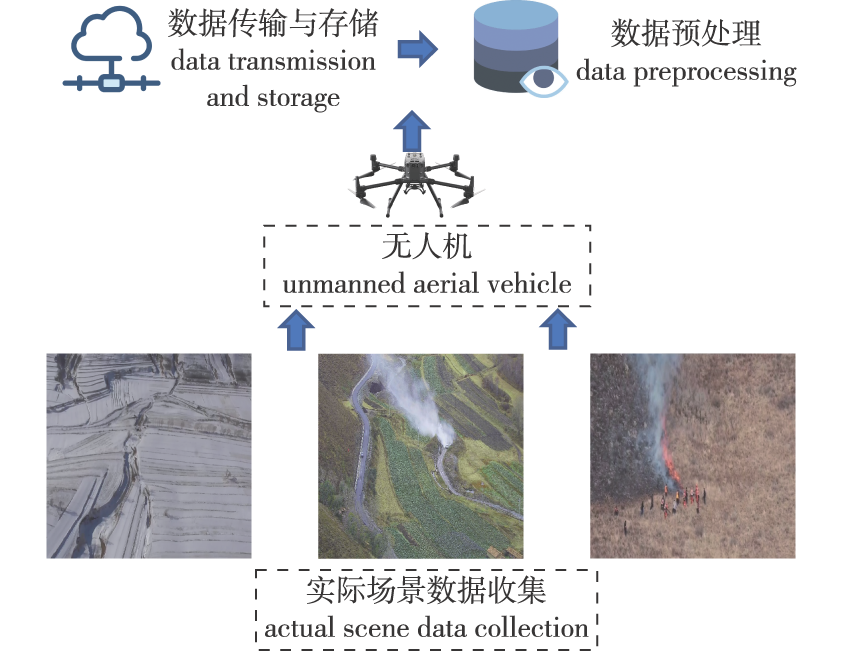

【Objective】Aerial forest fire images often contain small fire-point targets against complex backgrounds. To address these challenges and improve the accuracy and robustness of aerial forest fire image recognition, we propose FireViT, an image recognition method based on the self-attention mechanism.【Method】Forest fire videos collected by drones in Chongli District, Zhangjiakou City, were preprocessed to construct a dataset. A 10-layer vision transformer (ViT) was selected as the backbone network. Images were serialized into patch sequences using overlapping sliding windows, and the patch embeddings, together with positional information, were fed into the first ViT layer. Regions selected by a region selection module from the first nine ViT layers were fed into the tenth layer, where they were integrated through multi-head self-attention and a multi-layer perceptron. This amplified subtle differences between image sub-regions and helped capture the features of small targets. Finally, a contrastive feature learning strategy was used to construct the objective loss function for model prediction. The model was validated on training/test splits with sample ratios of 8∶2, 7∶3, 6∶4, and 4∶6, and its performance was compared with that of five classical models.【Result】Under all four training/test split ratios, the model achieved a recognition rate of 100%, with accuracies of 94.82%, 95.05%, 94.90%, and 94.80%, respectively, for an average accuracy of 94.89%, surpassing all five comparison models. The model converged rapidly, and its recognition accuracy remained high and stable in subsequent iterations. It also showed strong generalization ability: its recognition rates were 99.97%, 99.89%, 99.80%, and 99.77%, again higher than those of the five comparison models.【Conclusion】By integrating a self-attention mechanism with weakly supervised learning, the model revealed distinct local feature variations in aerial forest fire images across various environments. The approach exhibited strong generalization capability and robustness, which is significant for improving the capacity, efficiency, and effectiveness of fire situation management and hazard response, and it can contribute to the prevention of forest wildfires.

aerial forest fire image / self-attention mechanism / fine-grained classification / vision transformer / forest fire prevention / unmanned aerial vehicles (UAV) / Chongli District of Zhangjiakou City
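The Method step that serializes each image with overlapping sliding windows and adds positional information can be illustrated with a minimal NumPy sketch. The abstract does not give the window size, stride, or embedding dimension, so `patch=16`, `stride=12`, and `dim=64` below are assumed values; the random projection, [CLS] token, and positional table stand in for what would be learned parameters in a real ViT, and `overlapping_patches` / `embed_with_positions` are hypothetical helper names.

```python
import numpy as np


def overlapping_patches(image: np.ndarray, patch: int = 16, stride: int = 12) -> np.ndarray:
    """Cut an (H, W, C) image into overlapping patch x patch windows (assumed sizes).

    Because stride < patch, neighbouring windows share (patch - stride) pixels.
    Windows are read row by row and each one is flattened to a vector.
    Trailing pixels that do not fill a whole window are ignored.
    """
    h, w, c = image.shape
    n_rows = (h - patch) // stride + 1
    n_cols = (w - patch) // stride + 1
    out = np.empty((n_rows * n_cols, patch * patch * c), dtype=image.dtype)
    k = 0
    for i in range(n_rows):
        for j in range(n_cols):
            y, x = i * stride, j * stride
            out[k] = image[y:y + patch, x:x + patch, :].reshape(-1)
            k += 1
    return out


def embed_with_positions(patches: np.ndarray, dim: int = 64, seed: int = 0) -> np.ndarray:
    """Project patches to `dim`, prepend a [CLS] token and add positional embeddings.

    The projection, [CLS] token and positional table are random placeholders here;
    in a trained ViT they are learnable parameters.
    """
    rng = np.random.default_rng(seed)
    proj = rng.normal(0.0, 0.02, size=(patches.shape[1], dim))
    cls_token = rng.normal(0.0, 0.02, size=(1, dim))
    pos_table = rng.normal(0.0, 0.02, size=(patches.shape[0] + 1, dim))
    tokens = patches.astype(np.float64) @ proj
    tokens = np.concatenate([cls_token, tokens], axis=0)
    return tokens + pos_table  # sequence fed to the first transformer layer


# Usage: a 448 x 448 RGB frame yields a 37 x 37 grid of overlapping windows.
frame = np.zeros((448, 448, 3), dtype=np.float32)
seq = embed_with_positions(overlapping_patches(frame))
print(seq.shape)  # (1370, 64)
```

With these assumed sizes, the number of windows per side is (448 - 16) // 12 + 1 = 37, so overlap raises the sequence length from 28 × 28 = 784 non-overlapping patches to 1,369 (plus the [CLS] token). This is the price paid for keeping small fire points from being split across patch borders.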

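The abstract does not detail how the region selection module turns the first nine layers into input for the tenth. One common design for ViT-based fine-grained recognition, used in TransFG, multiplies the attention maps of the earlier layers and, for each head, keeps the patch token that the [CLS] token attends to most. The sketch below follows that design as an assumption, not as FireViT's confirmed implementation; `select_regions` and the array shapes are hypothetical.

```python
import numpy as np


def select_regions(attn_maps, hidden):
    """Attention-rollout token selection (TransFG-style part selection, assumed here).

    attn_maps: list of arrays, one per earlier layer (e.g. layers 1-9),
               each of shape (heads, N, N) with rows summing to 1.
    hidden:    (N, D) token features output by the last of those layers;
               row 0 is the [CLS] token.
    Returns the (1 + heads, D) input for the final layer and the chosen indices.
    """
    joint = attn_maps[0]
    for attn in attn_maps[1:]:
        joint = attn @ joint                  # per-head product: A_l ... A_2 A_1
    cls_to_patches = joint[:, 0, 1:]          # how much [CLS] draws on each patch
    idx = cls_to_patches.argmax(axis=1) + 1   # one patch per head; +1 skips [CLS]
    tokens = np.concatenate([hidden[:1], hidden[idx]], axis=0)
    return tokens, idx


def softmax(x, axis=-1):
    """Row-wise softmax, used only to build example attention maps."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


# Usage: 9 earlier layers, 4 heads, 26 tokens (1 [CLS] + 25 patches), 64-dim features.
rng = np.random.default_rng(0)
maps = [softmax(rng.normal(size=(4, 26, 26))) for _ in range(9)]
feats = rng.normal(size=(26, 64))
tokens, idx = select_regions(maps, feats)
print(tokens.shape)  # (5, 64)
```

Feeding only the [CLS] token plus one selected token per head to the last layer forces that layer's self-attention to compare a handful of strongly attended regions, which is one way to amplify the small differences between sub-regions that the abstract describes.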

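The contrastive feature learning strategy is only named in the abstract. A common form, used by TransFG, averages over all B × B pairs in a batch of L2-normalized [CLS] features z, adding 1 − cos(z_i, z_j) for same-class pairs and max(cos(z_i, z_j) − α, 0) for different-class pairs, so dissimilar classes are penalized only when they are more similar than the margin α. The NumPy sketch below implements that form on the assumption that FireViT uses something comparable; `contrastive_loss` and the margin α = 0.4 are assumptions.

```python
import numpy as np


def contrastive_loss(features: np.ndarray, labels: np.ndarray, margin: float = 0.4) -> float:
    """Batch contrastive loss over L2-normalised features (TransFG-style, assumed).

    Same-class pairs contribute 1 - cos(z_i, z_j); different-class pairs
    contribute max(cos(z_i, z_j) - margin, 0). The sum is averaged over all B*B pairs.
    """
    z = features / np.linalg.norm(features, axis=1, keepdims=True)
    cos = z @ z.T
    same = labels[:, None] == labels[None, :]
    pos_term = np.where(same, 1.0 - cos, 0.0)
    neg_term = np.where(same, 0.0, np.maximum(cos - margin, 0.0))
    b = features.shape[0]
    return float((pos_term + neg_term).sum() / (b * b))


# Usage: two identical features with different labels are penalised
# (1 - 0.4) for each of the two off-diagonal pairs, averaged over 4 pairs.
feats = np.array([[1.0, 0.0], [1.0, 0.0]])
print(contrastive_loss(feats, np.array([0, 1])))  # 0.3
```

In TransFG this term is simply added to the cross-entropy classification loss; the abstract does not say whether FireViT weights the two terms differently.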