Visible forest fire detection using SBP-YOLOv7

ZHANG Xiaowen, ZHANG Fuquan

Journal of Nanjing Forestry University (Natural Sciences Edition), 2025, Vol. 49, Issue 3: 103-109.

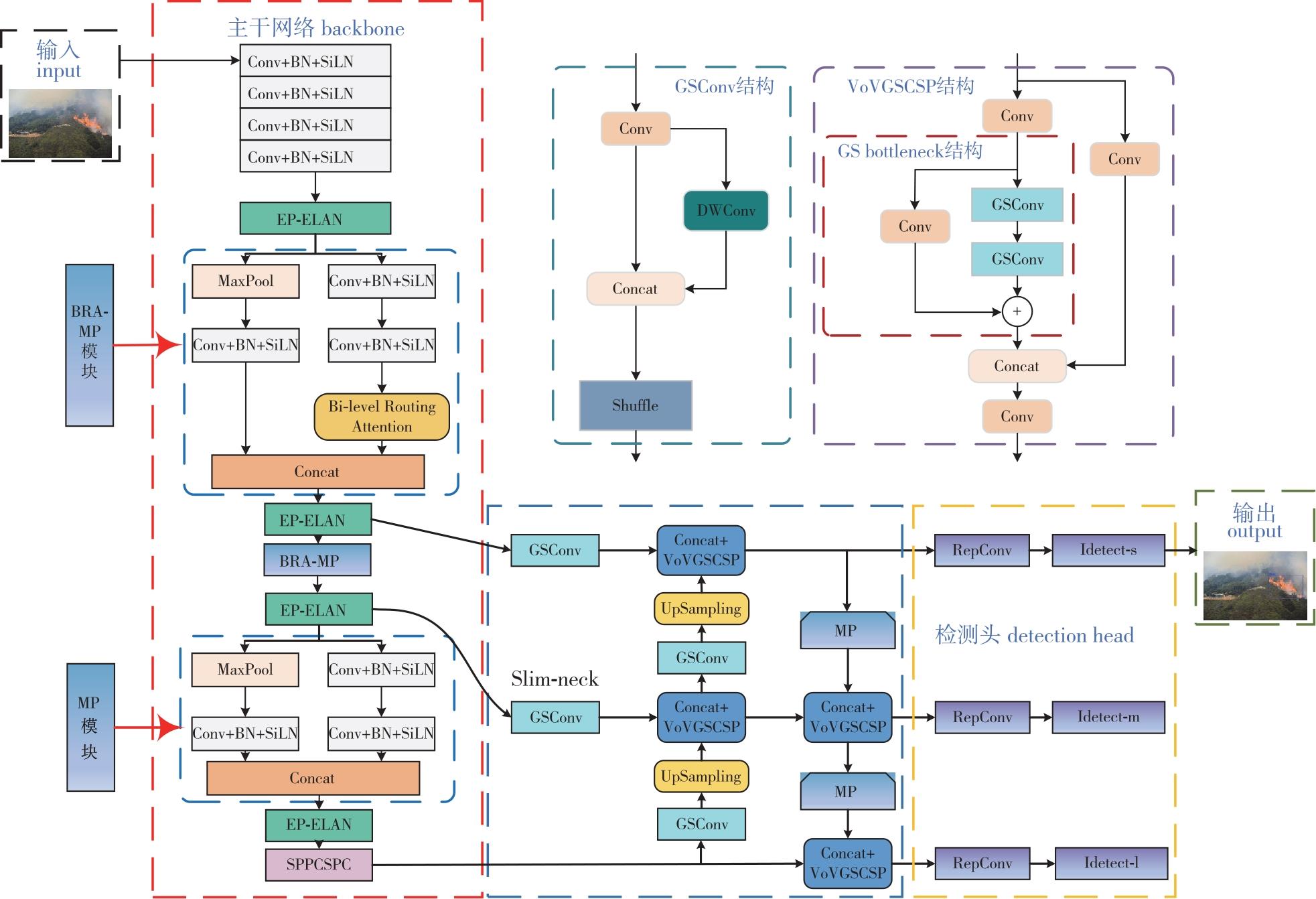

【Objective】Forest fires pose a significant threat to the natural environment and human safety, so timely and accurate detection of fire sources is essential. However, complex forest environments, characterized by high tree density, ground litter accumulation, and dense canopies, make effective fire detection difficult. To address these issues, this study proposes a forest fire detection method, SBP-YOLOv7.【Method】The proposed method incorporates three modifications to YOLOv7. First, an attention-enhanced downsampling module (BRA-MP) is introduced to preserve discriminative features during downsampling, strengthening feature representation and semantic relevance and thereby improving small-target detection. Second, an extended partial convolution efficient layer aggregation module (EP-ELAN) is integrated into the backbone to reduce redundant computation and the number of model parameters. Finally, a Slim-neck module is used for feature fusion, maintaining accuracy while lowering computational cost.【Result】Comparative evaluations on a forest fire dataset show that SBP-YOLOv7 achieves an AP of 87.0%, a 2.3% improvement over the original YOLOv7, while reducing the parameter count by 22.77% and the computational cost by 17.13%.【Conclusion】Compared with the original YOLOv7 algorithm, the proposed SBP-YOLOv7 model offers higher accuracy and efficiency, enabling faster and more precise detection of forest fires even in challenging environments.

deep learning / forest fire detection / forest scenes / YOLOv7 / attention mechanism
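The abstract attributes part of the compute saving to partial convolution in EP-ELAN. As a rough illustration of why convolving only a subset of channels is cheaper, the sketch below counts multiply-accumulate operations for a standard convolution and for a partial convolution on one layer. The layer size (40 x 40 feature map, 256 channels, 3 x 3 kernel) and the 1/4 channel ratio are assumptions chosen for illustration; they are not taken from the paper, and the function names are hypothetical. The result describes a single layer only and is not comparable to the whole-model figures reported above.

```python
def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulates for a k x k convolution (stride 1, same padding, no bias)."""
    return h * w * k * k * c_in * c_out


def partial_conv_macs(h, w, k, channels, ratio=0.25):
    """Partial convolution: only a fraction `ratio` of the channels is convolved;
    the remaining channels are passed through unchanged at no arithmetic cost.
    The ratio of 1/4 is an illustrative assumption, not a value from the paper."""
    c_p = int(channels * ratio)
    return conv_macs(h, w, k, c_p, c_p)


def relative_reduction(baseline, reduced):
    """Percentage reduction of `reduced` relative to `baseline`."""
    return 100.0 * (baseline - reduced) / baseline


# Hypothetical layer: 40 x 40 feature map, 256 channels, 3 x 3 kernel.
full = conv_macs(40, 40, 3, 256, 256)
partial = partial_conv_macs(40, 40, 3, 256, ratio=0.25)
saving = relative_reduction(full, partial)

print(f"standard conv MACs: {full}")
print(f"partial conv MACs:  {partial}")
print(f"per-layer saving:   {saving:.2f}%")
```

With a 1/4 channel ratio the convolved slice costs 1/16 of the full layer, because the cost scales with the product of input and output channel counts. A full network mixes such layers with many unchanged ones, which is consistent with whole-model savings being far smaller than the per-layer figure.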
